WARNING: This blogpost is a first draft, and in some places is lacking in details, for which I apologize in advance. I expect that I will later be able to add some of those details after doing a little more research, but I have pretty much spent my entire day in one metaverse platform after another, I am tired, I am achy, and I wanted to get some information out to all my readers as soon as possible while everything was still (relatively) fresh in my mind. Forgive any errors and omissions I have made!

In particular, despite a few organizational and technical issues, I received a highly favourable impression of Foretell Reality’s work, and I do hope to follow up with more of a deep-dive on that company sometime soon, as I have done with other metaverse platforms in the past.

And yes—in case you hadn’t noticed—this metaverse blogger is BACK! My self-imposed hiatus is OVER and I will again be writing regularly about social VR, virtual worlds, and the metaverse (with a side of generative AI, which I have been actively learning about since summertime).

Because of my workload, I was only able to attend one session of the IMMERSIVE X metaverse conference on Wednesday, November 12th:

- Conversational AI in Healthcare (held in Foretell Reality, which was a new-to-me platform).

However, I more than made up for it on Thursday, November 13th, attending the following five conference sessions:

- Private, Present & Fully Heard: How Virtual Reality is Reclaiming the Power of Anonymous Peer Support (held in Foretell Reality)

- Healing Beyond Walls: VR Social Support For Patients At SickKids (held in Foretell Reality)

- Immersive Learning Beyond the Classroom (held in ENGAGE)

- AI, WebXR and the Future of the Immersive Web (held in Hubs)

- Will AR Be The Big Immersive Breakthrough? (held in VRChat)

So I will briefly report on each of these six sessions, one by one.

I accessed the three sessions held in Foretell Reality using the Meta Quest 3 wireless headset at my workplace, and I entered the sessions in ENGAGE and VRChat using my PCVR setup at work: a Vive Pro 2 VR headset tethered to a Windows desktop PC with a fairly decent NVIDIA graphics card.

The remaining session, held in Hubs (formerly Mozilla Hubs), I could have entered via virtual reality, but instead I opted to pay a visit via the flatscreen monitor on my trusty MacBook Pro! By the end of the day, my neck and shoulders were aching, but I did make it through.

Conversational AI in Healthcare

While this was not the first time that I had seen artificial intelligence combined with social VR (the first time was a memorable conversation I had with an AI-enhanced toaster in the now-shuttered platform called Tivoli Cloud VR, back in January of 2021), this one had a more practical purpose: to use generative AI to power a diabetes counselor (played by an NPC avatar) who could hold a conversation with a real-life person who has questions after being newly diagnosed with type II diabetes.

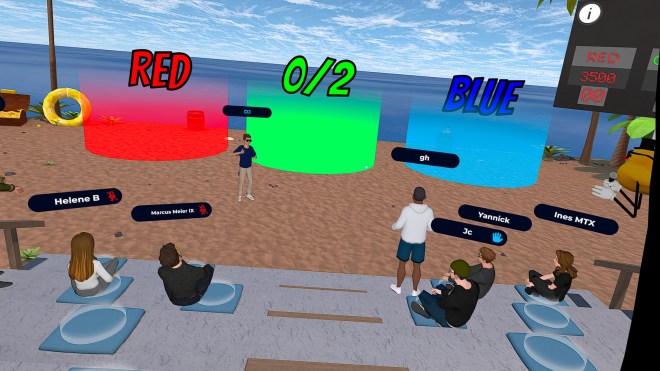

An initial discussion held in an open-air auditorium was followed by a group teleport to a lecture theatre where the embodied AI chatbot (a woman dressed in light blue, centre) held a conversation with a demonstrator (the woman named Ines MTX):

When I asked which generative AI system was being used to drive this demo, I was told that Foretell Reality can actually use any of Google’s Gemini, OpenAI’s ChatGPT, or Anthropic’s Claude to generate responses. As somebody who was diagnosed with type II diabetes during the recent pandemic, and who never had an opportunity to meet with a real-life diabetes coach, I would really have appreciated having something like this available!

Unfortunately, the conference session description was frustratingly short on concrete details: who the speakers were, what company (or companies) they represented (other than Foretell Reality), and who the actual client was. It was also not clear to me whether this was just a tech demo or an actual system used by real people. And, because I was in my Meta Quest 3 headset, I could not take any written notes as people were speaking. As far as I can remember, a company called MTX was also involved. This is an example of where an inadequate session description hampers my ability to report on the event itself, as impressive as the technology demo was.

Private, Present & Fully Heard: How Virtual Reality is Reclaiming the Power of Anonymous Peer Support

Unlike the previous day’s session, both of the Thursday sessions I attended in Foretell Reality were sterling examples of how social VR can be used as an effective solution to real-world problems, providing tangible benefits.

First up, here’s the conference blurb about the NorthStar project:

In traditional Alcohol & Substance Use Disorder treatment spaces, anonymity is often promised but rarely provided. NorthStar’s groundbreaking VR platform redefines what true anonymity can look like—and how it unlocks unparalleled honesty, vulnerability, and connection. This session explores how immersive, avatar-based peer support transforms treatment outcomes by allowing patients to show up fully without being seen, while feeling surrounded by a community. We’ll discuss how VR group therapy makes treatment more accessible, more private, and more powerful—meeting people where they are – literally – while protecting who they are.

Unfortunately, the representative from NorthStar was unable to attend this session, but DJ from Foretell Reality still had plenty to show us, taking us on a sort of field trip through the various settings the company built to host NorthStar’s virtual group meetings (based on Alcoholics Anonymous principles), such as an urban park where you could toss a stick and have one of several virtual dogs fetch it back to you:

Other locations included a chilly space station, where you could see your breath in front of you in the frosty air, and gravity could be turned off and on at will:

And finally, a newer addition: a competitive shooting game where you were part of a team trying to shoot down rubber ducks of various colours! (I’m not sure if this last one was actually used by NorthStar clients, though.)

Overall, and especially when combined with the next conference session, described below, I came away with a very favourable impression of Foretell Reality. You can check out their website here.

Healing Beyond Walls: VR Social Support For Patients At SickKids

Another Foretell Reality client is Toronto, Ontario’s famous SickKids Hospital. Here’s the conference blurb:

Join us for a special fireside chat with Shaindy, Clinical Manager [of the] Child Life Program at SickKids Hospital in Canada and DJ Smith, Co-Founder and Chief Creative Officer of Foretell Reality. Together, they will share how virtual reality is transforming the way children facing serious illnesses connect, play, and support one another. Shaindy will discuss her groundbreaking program that allows kids to log in once a week to a virtual world for group sessions. DJ will highlight how Foretell Reality’s platform has powered successful clinical pilots and is now scaling to reach even more children. This conversation will explore the impact on patients and families, the power of hospital collaboration, and the future of immersive technology in pediatric care.

By “kids,” Shaindy explained that these were actually teenagers (aged 13 to 19) who were in hospital or a hospice, fighting various health-threatening conditions such as cancer. Because of their illnesses, these teenagers often found it difficult to socialize, which is where social VR afforded them an opportunity to interact and have fun virtually. Shaindy explained that they would get groups of six or so patients together, and they would keep it open and freeform so the “kids” could join or leave as they felt able to do so.

Among the many stories told was the delight of one patient who discovered a rubber ducky hiding in one of the virtual environments, which led to a quest to hide ducks (and pigs!) in as many environments as possible, for others to find. DJ helpfully rezzed one such duck for show-and-tell (also a pig, but I didn’t take a picture of that!). I apologize for the lopsided aspect of some of these screenshots; finding the right balance of your head in a VR headset when taking screenshots is a bit of a black art, at which I usually fail miserably!!

The presentation ended with a group teleport to a meditation centre, where Shaindy led us through a box breathing exercise, helped along by the in-world painting tools installed by Foretell Reality!

This was one of the most heartwarming conference sessions I have ever attended, and I wish this project every success as they hope to expand this service to more hospitals in future!

Immersive Learning Beyond the Classroom

This session, held in ENGAGE, had a capacity crowd of avatars present (in fact, there were so many avatars that my experience began to degrade to the point where I eventually had to bail out of my Vive Pro 2 VR headset or risk nausea!). Because of that, I missed roughly the final third of the talk. Here’s the blurb:

How can immersive environments transform teaching, learning, and cross-cultural connection? This panel brings together diverse perspectives from the fields of education and innovation.

Chris Madsen empowers organizations worldwide through the ENGAGE XR platform. Wolf Arne Storm and his team at the Goethe-Institut created GoetheVRsum, which explores new formats in culture, language, and creativity. Marlene May researches and teaches in 3D virtual spaces at Karlshochschule International University and Birgit Giering is pioneering the large-scale adoption of XR in schools of North Rhine-Westphalia. Moderated by Prof. Dr. Dr. Björn Bohnenkamp, this session will explore the future of learning beyond traditional classrooms.

However, this time I was able to take some chicken-scratch handwritten notes! So here goes… Wolf-Arne spoke about the Goethe-Institut, Marlene spoke about Karlshochschule International University (in fact, the space where we met in ENGAGE was one of their creations), and Birgit spoke about her work in the schools of North Rhine-Westphalia.

The Goethe-Institut is Germany’s premier cultural institute, with locations around the world teaching German language and culture. The organization chose ENGAGE as its metaverse platform, creating a virtual space called the GoetheVRsum, whose design draws inspiration from the works of various Bauhaus artists.

It was a shame that technical glitches kinda marred the overall experience for me, but I am glad that I was able to make it in, and make it through most of it!

AI, WebXR and the Future of the Immersive Web

This session was held in (formerly Mozilla) Hubs, and much like all Hubs experiences I have ever had, it tended towards the spontaneous, the off-the-cuff and the chaotic! Like the ENGAGE session, it was unfortunately plagued by technical issues. The presenter, Adam Filandr, talked about how he used open-source WebXR code and generative AI tools to create something called NeoFables, which delivers personalized worlds, characters, and storytelling (currently limited to 2D images, although he hopes to expand it over time to create 3D content).

He discussed the advantages and disadvantages of using WebXR to create VR content, and gave a couple of examples of bigger-name projects based on WebXR (Wol, made by Google to provide information about the U.S. national parks system, and Raw Emotion Unites Us, about Paralympian athletes). Despite the technical hiccups, it was interesting to hear a developer’s perspective on using WebXR to create content, mixed in with generative AI tools.

Will AR Be The Big Immersive Breakthrough? (Heather Dunaway Smith and Lien Tran)

My final session on Thursday, Nov. 13th was not what I expected. It was a panel discussion with two musicians and artists, Lien and Heather, who have worked extensively with augmented reality and mixed reality. They shared samples of their work, and the panel (moderated by Christopher Morrison) held a wide-ranging discussion on how AR/MR/XR (or, as Chris put it, “XR-poly”) is impacting and transforming creative expression. I’m not sure if there will be a livestream of this talk (I did not see Carlos and his video camera while I was there), so I will leave it at that, since (again) I did not take written notes.